2. Uniparametric models#

Consider a random variable \(Y\), whose likelihood, when it takes the value \(y\) and the parameters \(\theta\) take the value \(t\), is given by

For example, if \(Y\) is a variable with a normal distribution with mean \(\theta\) and unit variance, then

In the Bayesian argot, it is usual to abuse of the notation, sometimes even dangerously, for example

would be written as

2.1. Notation#

Before moving forward, it is good to introduce at this point the notation that we are going to use throughout this book. However, sooner than later, we are going to follow the abuse of notation usual in the field of Bayesian statistics.

Random variables: \(Y, \theta\).

Sample space: \(\mathcal{Y}\), \(Y\in\mathcal{Y}\).

Parametric space: \(\Theta\), \(\theta\in\Theta\).

Random sample: \(\mathbf{Y}=(Y_1,\ldots,Y_n)\).

Observed sample: \(\mathbf{y}=(y_1,\ldots,y_n)\).

Prior distribution or a priori: \(p(\theta)\).

Likelihood: \(p(Y|\theta)\).

Likelihood of the sample: \(p(\mathbf{Y}|\theta)\).

Evidence: \(p(\mathbf{Y})\).

Posterior distribution or a posteriori: \(p(\theta|\mathbf{Y})\).

2.2. Posterior distribution#

The prior distribution of probability is the distribution based on the previous information (expertise of specialists, historical data, etc.), prior to obtain new measurements. Then, we get new data (we obtain evidence) and combine this new information with the prior distribution using Bayes’ rule to obtain the posterior distribution of probability:

With the usual abuse of notation of Bayesian statistics, the previous result would be written as:

or, equivalentely

2.3. Beta-Binomial#

Assume that \(Y_i|\theta\overset{iid}{\sim}\text{Bernoulli}(\theta)\) and the uncertainty about \(\theta\in (0,1)\) is quantified through \(\theta\sim\textsf{Beta}(\alpha, \beta)\). We get the observations \(\mathbf{y}=(y_1,\ldots,y_n)\), then

and

Thus,

that is,

Remember that if \(Y_1\ldots,Y_n|\theta\overset{iid}{\sim}\textsf{Bernoulli}(\theta)\), then

Therefore, if

we conclude that

2.3.1. Example#

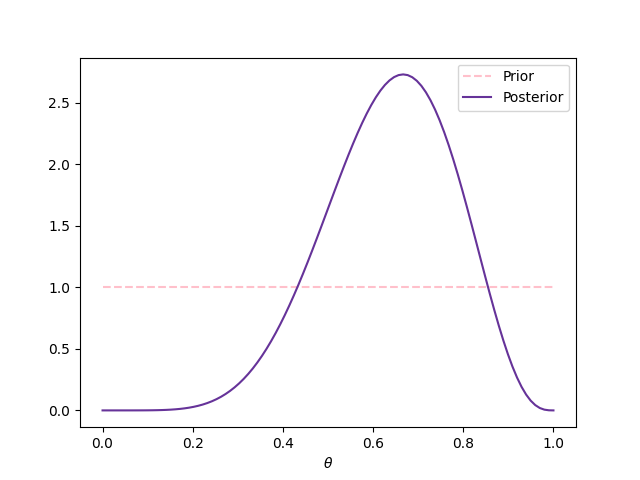

Assume that \(Y|\theta\sim\textsf{Bernoulli}(\theta)\) and that we don’t have information about \(\theta\) to prefer some value over another, so we model \(\theta\sim\textsf{Beta}(1,1)\) (i.e. \(\theta\sim\textsf{Uniform}(0,1)\)). Then, we get a simple random sample (i.e. we obtain an iid sample) \(\mathbf{y}=(1,0,1,1,1,0,1,0,1)\), or equivalently \(z=6\) and \(n=9\). Next figure shows the prior and posterior distributions.

2.4. Principle of indifference#

Analyzing the binomial model, Laplace assumed the uniform distribution as prior arguing what he called the principle of indifference, also called principle of insufficient reason, which stablishes that the uniform assumption is appropriate when we have no information about a parameter.

For the binomial likelihood, this means that

2.4.1. Proportion of girls’ births#

One of the first applications of this model made by Laplace, was the estimation of the proportion of girls’ births, \(\theta\), in a population. Laplace knew that between 1745 and 1770, 241945 girls and 251527 boys were born in Paris, if \(Y\) denotes the number of girls’ births, then

With this result, we can show that it is more probable that a boy is born than a girl, as it is shown in the next cell, which calculates \(\mathbb{P}(\theta>0.5|Y)\).

from scipy.stats import beta

fem_births = 241945

mal_births = 251527

beta.sf(0.5, fem_births+1, mal_births+1)

1.1460584901546728e-42

2.4.2. Probability of a girl birth given placenta previa#

Note

This example was taken from [GCS+13]

Placenta previa is an unusual condition of pregnancy in which the placenta is implanted low in the uterus. An early study concerning the sex of placenta previa births in Germany found that of a total of 980 births, 437 were female.

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from scipy.special import logit, expit

births = 987

fem_births = 437

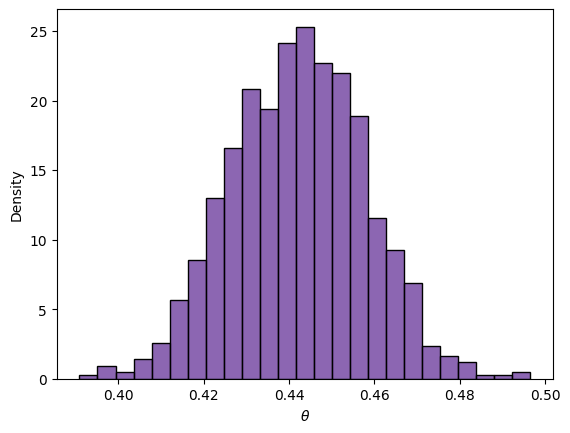

Let be \(\theta\) the probability of a girl’s birth given placenta previa. With the previous data, we can calculate the posterior distribution. Once we have this posterior, we can make different inferences about the parameter \(\theta\). For example, next cell calculates the posterior mean of \(\theta\).

# Posterior mean

beta.mean(fem_births+1, births-fem_births+1).round(3)

0.443

We can give an interval of probability, next cell gives an interval of approximately 0.95 posterior probability, assuming a normal approximation to the posterior, we calculate the interval taking the mean minus/plus two times its standard deviation.

# Posterior interval

LowInterval = beta.mean(fem_births+1, births-fem_births+1) - 2 * beta.std(fem_births+1, births-fem_births+1)

UppInterval = beta.mean(fem_births+1, births-fem_births+1) + 2 * beta.std(fem_births+1, births-fem_births+1)

round(LowInterval,3), round(UppInterval,3)

(0.411, 0.474)

We can also simulate a sample from the posterior to make inferences

PosteriorSample = beta.rvs(size=1000, a=fem_births+1, b=births-fem_births+1)

sns.histplot(PosteriorSample, color='rebeccapurple', stat='density')

_ = plt.xlabel(r'$\theta$')

np.quantile(PosteriorSample, [0.025, 0.975]).round(3)

array([0.413, 0.472])

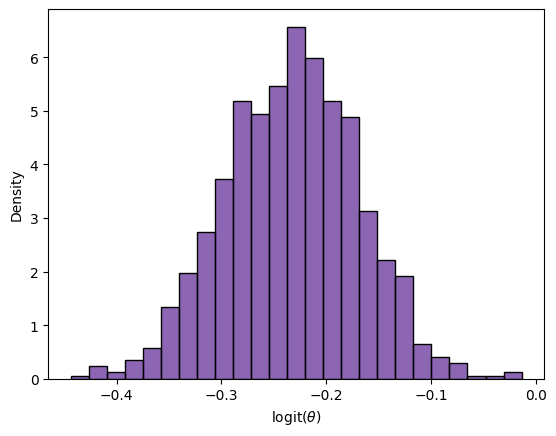

The normal approximation is generally improved by applying it the logit transformation, \(\log (\frac{\theta}{1-\theta})\), which transforms the parameter space from the unit interval to the real line.

LogitPosteriorSample = logit(PosteriorSample)

sns.histplot(LogitPosteriorSample, color='rebeccapurple', stat='density')

_ = plt.xlabel(r'logit$(\theta)$')

LowLogitInterval = LogitPosteriorSample.mean() - 2 * LogitPosteriorSample.std()

UppLogitInterval = LogitPosteriorSample.mean() + 2 * LogitPosteriorSample.std()

expit(LowLogitInterval).round(3), expit(UppLogitInterval).round(3)

(0.411, 0.474)

2.4.3. Cells production of protein#

Note

This example was taken from the notes of Bayesian Statistics of J. Andrés Christen.

Assume that a particular population of cells can be in one of three states of protein production: A, B and C, corresponding to low, mid and high production, respectively. If the population is in the state A, we expect that 20% of cells are producing the protein, if it is in the state B we expect 50% and if it is in state C we expect 70%.

We take a random sample of 20 cells and verify if each one of them is in production of the protein (the result of the test for each cell is 1 if it is in production and 0 if it is not). In this sample, we found that 12 cells were in production and the rest were not. What is the probability that the population is in each one of the states?

The next cells show two ways to find the answer to the question.

Show code cell content

theta_A = 0.2

theta_B = 0.5

theta_C = 0.7

print(f"Prob. A: {theta_A**12 * (1-theta_A)**8 / (theta_A**12 * (1-theta_A)**8 + theta_B**12 * (1-theta_B)**8 + theta_C**12 * (1-theta_C)**8):.4f}")

print(f"Prob. B: {theta_B**12 * (1-theta_B)**8 / (theta_A**12 * (1-theta_A)**8 + theta_B**12 * (1-theta_B)**8 + theta_C**12 * (1-theta_C)**8):.4f}")

print(f"Prob. C: {theta_C**12 * (1-theta_C)**8 / (theta_A**12 * (1-theta_A)**8 + theta_B**12 * (1-theta_B)**8 + theta_C**12 * (1-theta_C)**8):.4f}")

Prob. A: 0.0004

Prob. B: 0.5120

Prob. C: 0.4876

Show code cell content

from scipy.stats import binom

p_A = binom.pmf(12, 20, theta_A)

p_B = binom.pmf(12, 20, theta_B)

p_C = binom.pmf(12, 20, theta_C)

print(f"Prob. A: {p_A/(p_A+p_B+p_C):.4f}")

print(f"Prob. B: {p_B/(p_A+p_B+p_C):.4f}")

print(f"Prob. C: {p_C/(p_A+p_B+p_C):.4f}")

Prob. A: 0.0004

Prob. B: 0.5120

Prob. C: 0.4876

2.5. Predictive distributions#

In many cases we are more interested in the behavior of future observations of the phenomenon than on some vector of parameters \(\theta\). Usually, in frequentist statistics we solve this problem using a punctual estimator of \(\theta\) based on the observed sample, \(\hat{\theta}\), who is later plug in \(p(Y|\theta)\), that is, we use \(p(Y|\hat\theta)\) to predict the behavior of future observations.

In Bayesian statistics the problem is solved marginalizing the joint distribution of \(\theta\) and \(Y\).

2.5.1. Prior predictive distribution#

Thus, the prior predictive distributions can be caculated as

2.5.2. Posterior predictive distribution#

Once we obtain a sample \(\mathbf{Y}\), it induced a joint distribution for \(Y\) and \(\theta\) conditional on the sample,

Thus, the posterior predictive distribution can be calculated as

2.5.3. Rule of succession#

Consider the Beta-Binomial model, and the prior \(\theta\sim\textsf{Beta}(1,1)\), thus the posterior distribution of \(\theta\) is given by

Moreover, remember that if \(\theta\) is a random variable with a distribution \(\textsf{Beta}(\alpha,\beta)\), then

so

Assume that we want to know the probability that a new Bernoulli observation \(\tilde{Y}\) takes the value of 1, that is we want to know the posterior predictive \(\mathbb{P}(\tilde{Y}=1|Y)\).

If, for example, we have made \(n\) times a Bernoulli experiment without any success (\(Y=0\)), the probability of having success the next time is \(1/(n+2)\), while the probability of success estimated with classic probability is \(0/n=0\).

2.6. Determining the hyperparameters in the Beta-Binomial model#

One of the challenges to solve when we use Bayesian statistics is to determine the parameters of the prior distribution, which are called hyperparameters. One way to solve this problem is by interpreting the hyperparameters, and then to determine the most appropriate values for them.

For example, consider the model Beta-Binomial, in which the likelihood is given by

where \(a\) is the numer of successes and \(b\) is number of fails.

On the other hand, the prior distribution is given by

Comparing these two expressions, we conclude that \(\alpha-1\) is interpreted as the number of successes a piori and \(\beta-1\) as the number of fails a priori.

Therefore, if we haven’t made any experiment previously, we can set \(\alpha=1\) and \(\beta=1\). This would mean that \(\theta\sim\textsf{Uniform}(0,1)\), which coincides with the principle of indifference.

2.7. Normal convergence of the Beta-Binomial model#

We know that the Beta-Binomial model satisfies that

Moreover, remember that if \(\theta\) is a random variable with distribution \(\textsf{Beta}(\alpha,\beta)\), then

and

Thus, we have that

and

Note that when \(n\to\infty\), then \(Y\to\infty\) and \(n-Y\to\infty\), always that \(\theta\in(0,1)\). Therefore, when \(n\to\infty\) the value of the hyperparameters are negligible, and

and

On the other hand, by central limit theorem and Slutsky’s theorem, we know that

Analogously, it is satisfied that

That is, the posterior distribution converges to a normal random variable.

2.8. Inference of a fair or a biased coin#

Note

This example was taken from [EGP23].

Consider a box with two coins: one fair and one biased. Assume that the biased coin is built in such way that there is a probability of 3/4 of showing “head”. One person takes one coin (not necessarily at random) and starts flipping it.

In this case, we can define the measurable space as \(\Omega=\{\text{the coin shows head}, \text{the coin shows tail}\}\) and take as the sigma-algebra the power set of \(\Omega\), \(\mathcal{P}(\Omega)\). In this space, we define the random variable

Implicitly, we are not considering all other possible results, like the case where the coin lands vertically, that we cannot determine the result, etc.

Thus, our parametric space is \(\Theta=\left\lbrace\frac{3}{4}, \frac{1}{2}\right\rbrace\) and our sample space is \(\mathcal{Y}=\{0,1\}\).

We proceed now to calculate all the distributions presented in the Bayesian framework, beginning with the distributions that can be stablished prior to having access to a sample.

2.8.1. Prior distribution#

\(\mathbb{P}\left(\theta=\frac{3}{4}\right)=\alpha\), \(\mathbb{P}\left(\theta=\frac{1}{2}\right)=1-\alpha\), with \(\alpha\in(0,1)\). This prior distribution can be written in one line as

Note that it depends on the hyperparameter \(\alpha\) which is interpreted as the probability of choosing the biased coin. Note also, that we do not allow it to take the extreme values of 0 or 1, in such cases we would know without uncertainty what coin was the chosen one, also if \(\alpha=0\) or \(\alpha=1\) we would face problems when we want to calculate some distributions.

2.8.2. Likelihood#

We have the random variable \(Y|\theta\sim\textsf{Bernoulli}(\theta)\), thus the likelihood of our model is

2.8.3. Prior predictive distribution#

That is,

2.8.4. Likelihood of the sample#

Let be \(Y_1,\ldots,Y_n|\theta\overset{iid}{\sim}\textsf{Bernoulli}(\theta)\), then

2.8.5. Evidence#

2.8.6. Posterior distribution#

Now that we know the likelihood evaluated in the sample, the prior and the evidence, we can calculate the posterior distribution of \(\theta\).

Let be \(v=\frac{\alpha}{1-\alpha}\left(\frac{3^{\sum_{i=1}^n y_i}}{2^n}\right)\), then

that is

and

2.8.7. Posterior predictive distribution#

that is

Likelihood and likelihood of the sample

In this book I make the distinction between the likelihood and the likelihood of the sample. However, in the Bayesian literature this is not the rule but the exception, most of the Bayesian literature call both functions simply as likelihood. I have seen that students that face for the first time Bayesian statistics can be confused about which likelihood they should use when this distinction is not done. I don’t complain them, the first time I was confused as well.

2.9. There is no free lunch#

When we make inference using frequentist statistic the procedures are usually justified by an asymptotic analysis of the method. As a consequence, its performance for small samples is questionable. Meanwhile, the Bayesian statistic is valid for any sample size. This doesn’t mean that having more data is useless, but the opposite. The price to pay for this power is the dependence on the prior information. A non-reliable prior distribution compromises the results.

Historical discussion about the prior

Historically, some detractors of the Bayesian statistics have argumented about the arbitrariness of the prior distribution. It is true that prior distributions can be flexible enough to code different types of information. Then, if the prior can be anything, isn’t it possible to obtain any answer that you want? The answer is yes.

However, the likelihood is also a subjective model that is impose by the agent, and eventually with a large sample the effect of the prior distribution would be eventually buried. Thus, if your objective is to modify the results is easier modifying the likelihood.